Machine learning and its implications

In recent years, computer processors have become really powerful, and things that were considered impractical not so long ago are now becoming reality.

One of these things is machine learning (ML). The whole concept of ML is to use statistical methods to extract patterns from data.
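To make "extracting a pattern from data" a bit more concrete, here is a minimal Python sketch of one of the oldest statistical methods for doing it: fitting a straight line with ordinary least squares. This is not a neural network, just the simplest instance of the idea; the toy data points and the function name `fit_line` are made up for illustration.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the line that minimizes the sum of squared errors."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by the variance of x.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    # The best-fit line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical toy data that roughly follows y = 2x + 1, with some noise.
xs = [0, 1, 2, 3, 4]
ys = [1.1, 2.9, 5.2, 7.0, 8.8]

slope, intercept = fit_line(xs, ys)
print(f"pattern found: y ~ {slope:.2f}x + {intercept:.2f}")
# Use the extracted pattern to predict a point we have never seen.
print(f"prediction for x = 5: {slope * 5 + intercept:.2f}")
```

The "pattern" here is just two numbers, the slope and the intercept; neural networks extract patterns in the same spirit, only with many more numbers.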

I know you might have heard a lot of fuss about it, but the subject isn't new at all. In fact, machine learning is very old; it just emerged gradually rather than all at once.

The term 'machine learning' itself was coined in 1959 by Arthur Samuel, so this stuff really is pretty old, isn't it? So you might ask yourself, "Why is machine learning only now coming back into the public eye?" Well...

You see, the problem is that back then, computers were pretty slow, so handling huge amounts of data was nearly impossible and definitely couldn't be done in 'simple' software. Today, processor technology has become much better, and machine learning has become so easy and practical that you can do it at home on your own personal computer, without needing to visit a lab of any sort.

Today, neural networks are the most common approach to machine learning, so I'm going to talk about them here. The concept behind neural networks is pretty easy and straightforward: you just imitate the brain. That doesn't sound easy, does it? ^_^ Well, in practice it isn't, but the theory is quite accessible to anyone.

The human brain is made up of cells called neurons (neural cells) connected together. The connections are called synapses, and the key thing about them is that they are not all identical. (The cells aren't identical either, but that's not the point here.) So you can use these differences between connections to store patterns. In fact, that's most likely how the actual brain does it.

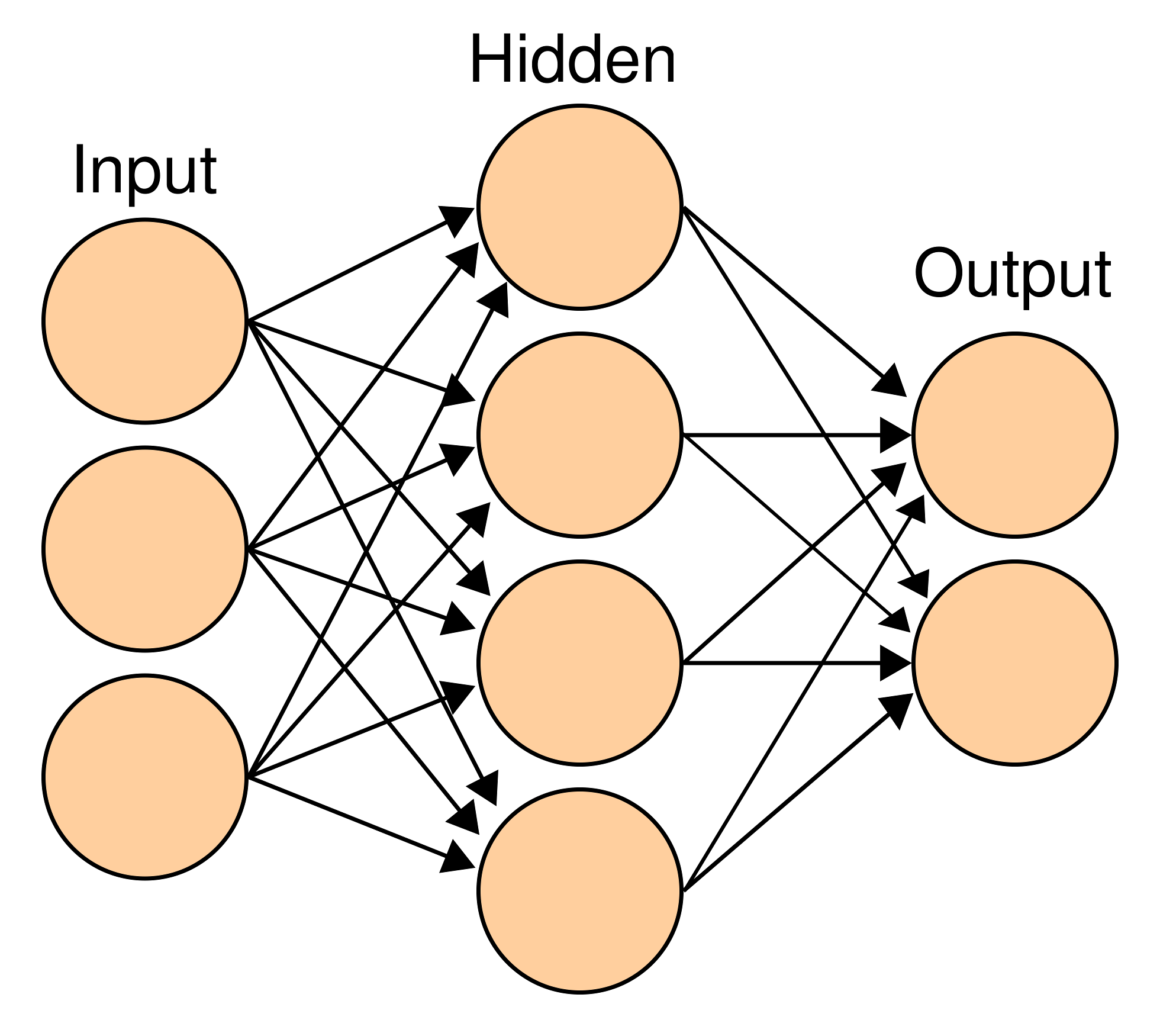

Let me explain a little about how information is processed in neural networks, using a simple working neural network as an example.

So you have layers of neurons connected together with synapses:

Image attribution: By en:User:Cburnett [GFDL or CC-BY-SA-3.0], via Wikimedia Commons

The first layer of neurons (the one on the left) is where the data is input. The middle layer is called an intermediate (or hidden) layer and is used to process the data better (more on that later). The last layer, on the right, is the output layer, where the system responds to the input with answers. The arrows represent the synapses.
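One way to picture this structure in code is the minimal sketch below. It assumes a hypothetical network with 3 input neurons, 4 intermediate neurons and 2 output neurons, where every neuron is connected to every neuron in the next layer; the layer sizes, the random starting strengths and the name `make_network` are illustrative assumptions, not read off the figure. Each table of numbers holds the strengths of the arrows between two adjacent layers.

```python
import random

# Hypothetical layer sizes: 3 input, 4 intermediate (hidden), 2 output neurons.
LAYER_SIZES = [3, 4, 2]


def make_network(layer_sizes, seed=0):
    """Create one table of synapse strengths per pair of adjacent layers.

    weights[k][i][j] is the strength of the synapse (arrow) going from
    neuron i in layer k to neuron j in layer k + 1.
    """
    rng = random.Random(seed)  # fixed seed so the example is reproducible
    weights = []
    for n_from, n_to in zip(layer_sizes, layer_sizes[1:]):
        # Assumption: every neuron connects to every neuron in the next layer.
        table = [[rng.uniform(-1.0, 1.0) for _ in range(n_to)] for _ in range(n_from)]
        weights.append(table)
    return weights


network = make_network(LAYER_SIZES)
synapse_count = sum(len(table) * len(table[0]) for table in network)
print("layers:", LAYER_SIZES)
print("synapses (arrows):", synapse_count)
```

With fully connected layers, the number of arrows is simply 3 × 4 + 4 × 2.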

Input is given to the first layer as numbers; anything else has to be transformed into numbers somehow. Usually the input consists of many numbers at once: an image made of many pixels, for instance, is fed to the system as a huge list of numbers, each one representing a single pixel or, more precisely, one color channel of a single pixel. Each number that enters an input neuron is then multiplied by the "strength" of each synapse leaving that neuron (technically called the weight of the synapse) and sent to the connected neuron in the next layer. So if a synapse is 'weak', the number might get smaller; on the other hand, if the synapse is 'strong', the number might get larger. All the other neurons in the layer do exactly the same thing.
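The two ideas in this paragraph, turning an image into a flat list of numbers and scaling a number by a synapse's strength, can be sketched in Python as follows. The tiny 2x2 image, the choice to scale channel values from 0..255 down to 0..1, and the helper names `flatten` and `send_along_synapse` are hypothetical, chosen only for illustration.

```python
# A tiny hypothetical 2x2 RGB image: each pixel is (red, green, blue), 0..255.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]


def flatten(image):
    """Turn an image into one flat list of numbers, one per color channel of each pixel.

    Channel values are scaled to the range 0..1 (a common choice, assumed here).
    """
    return [channel / 255.0 for row in image for pixel in row for channel in pixel]


def send_along_synapse(value, weight):
    """A synapse scales the number passing through it by its weight."""
    return value * weight


inputs = flatten(image)
print(len(inputs))  # 2 * 2 pixels * 3 channels each

print(send_along_synapse(0.8, 0.5))  # weak synapse: the number gets smaller
print(send_along_synapse(0.8, 2.0))  # strong synapse: the number gets larger
```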

In the next layer, each neuron simply adds up all the numbers it received and then does the same thing the neurons in the first layer did. This time, however, the results are sent to the output layer, and whatever arrives there is the output.
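Putting the layers together gives what is usually called a forward pass. The minimal sketch below follows the description above literally: each neuron just adds up the weighted numbers it receives. Real networks typically also add a bias and pass each sum through a nonlinear activation function (such as a sigmoid or ReLU); this sketch deliberately leaves both out. The hand-picked weights and the names `layer_forward` and `forward` are hypothetical.

```python
def layer_forward(inputs, weights):
    """Send numbers from one layer to the next.

    weights[i][j] is the synapse strength from neuron i (this layer)
    to neuron j (next layer). Each receiving neuron adds up everything it gets.
    """
    n_next = len(weights[0])
    sums = [0.0] * n_next
    for i, value in enumerate(inputs):
        for j in range(n_next):
            # Multiply by the synapse strength, then add to neuron j's total.
            sums[j] += value * weights[i][j]
    return sums


def forward(inputs, network):
    """Push the input through every layer; the last layer's numbers are the output."""
    values = inputs
    for weights in network:
        values = layer_forward(values, weights)
    return values


# Hypothetical hand-picked weights: 2 inputs -> 2 intermediate -> 1 output.
network = [
    [[0.5, 1.0],
     [2.0, 0.0]],  # input -> intermediate
    [[1.0],
     [3.0]],       # intermediate -> output
]

print(forward([1.0, 2.0], network))
```

Tracing it by hand: the intermediate neurons receive 1.0 × 0.5 + 2.0 × 2.0 = 4.5 and 1.0 × 1.0 + 2.0 × 0.0 = 1.0, and the output neuron receives 4.5 × 1.0 + 1.0 × 3.0 = 7.5.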

So as you can see, the key thing here is how strong each synapse is: that is what determines the output for a given input. With a complex enough neural network, these simple structures can represent quite abstract ideas, such as the image of a person.
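To see that the synapse strengths really are what matters, this minimal sketch (weighted sums only, no activation function, with hypothetical hand-picked weights) feeds the same input through two networks that have exactly the same shape but different weights.

```python
def forward(inputs, network):
    """Weighted sums, layer by layer; the last layer's numbers are the output."""
    values = inputs
    for weights in network:
        # Neuron j in the next layer adds up value_i * weight[i][j] over all i.
        values = [sum(v * weights[i][j] for i, v in enumerate(values))
                  for j in range(len(weights[0]))]
    return values


same_input = [1.0, 2.0]

# Two hypothetical networks with the same structure (2 -> 2 -> 1);
# only the strengths of the synapses into the output neuron differ.
network_a = [[[0.5, 1.0], [2.0, 0.0]], [[1.0], [3.0]]]
network_b = [[[0.5, 1.0], [2.0, 0.0]], [[-1.0], [0.5]]]

print(forward(same_input, network_a))
print(forward(same_input, network_b))
```

The two networks give different answers for the same input only because their weights differ. Learning, in this picture, means finding weights that produce the desired outputs; how that is done is what the resources recommended at the end of this post explain in detail.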

Now, this post is meant to be just a light intro to machine learning, so I won't go too deep into the details right now. If you can't wait for that, I recommend checking out the videos by 3Blue1Brown; I've found them particularly clear, useful and fun. Speaking of videos, I also recommend watching the presentation I gave at school on the subject. It is in Lebanese Arabic, but I have written English subtitles for it too. Finally, if you are really, really into it, you might want to check out Andrew Ng's wonderful course on Coursera.

Resources to learn more

(Check out my GitHub repository for more, and feel free to contribute. Thank you ^_^)